Here’s the answer from Grok AI:

TractoAI, an AI data lakehouse platform built on Nebius AI Cloud for data preparation, distributed training, and custom AI workflows, has secured several high-profile clients early in its development. These include:

- JetBrains: Uses TractoAI as a primary data lake for AI-related workloads.
- Mellum: Leverages the platform for big data and AI challenges, including model training.
- PleIAs.fr (Pleias): Relied on TractoAI to train a 1B-parameter open-source language model, benefiting from its distributed training capabilities.
- SynthLabs: A startup focused on AI post-training and reasoning models; they used TractoAI’s serverless platform for large-scale evaluations involving hundreds of thousands of GPU inference calls, finding it superior to alternatives like Azure and Lambda Labs.
- Unrealme.ai: Employs TractoAI for fine-tuning text-to-image models in their generative AI video app, integrating it directly into their backend for speed and flexibility.

These customers span software development, AI research, and generative media sectors, highlighting TractoAI’s role in enabling scalable AI innovation. As a newer initiative within Nebius Group, its client base is growing, with emphasis on LLM pre-training and custom model building.

In addition, the following are the notable customers of the other software solutions from Nebius:

Nebius’s AI software solutions, beyond TractoAI, include Nebius AI Studio (Inference-as-a-Service for open-source models), Managed MLflow (for ML lifecycle tracking), Managed Apache Spark (for big data processing), and Nebius Life Science (AI tools for healthcare and biotech). As a rapidly growing AI infrastructure provider, Nebius has a developing ecosystem of customers, primarily startups, research labs, and enterprises in AI, healthcare, and data processing. Publicly documented notable customers are drawn from case studies, announcements, and partnerships as of October 2025. Specific product attributions are noted where available; some span multiple tools.

Nebius AI Studio

This platform powers low-latency inference for models like Llama and Mistral, with per-token pricing. Notable users include:

- Chatfuel: Leading AI-powered customer engagement platform; uses a cascade of Llama-405B models via AI Studio for chatbot agents, achieving better response quality and speed.
- vLLM: Open-source LLM inference framework; tests and optimizes inference on AI Studio for high-performance, low-cost model serving in production.
- TrialHub: Clinical trial analytics firm; leverages RAG-optimized LLMs and semantic search on AI Studio to build a 250-million vector database in days for insights from 80,000+ medical sources.

Managed MLflow

A managed service for experiment tracking and model management, often integrated into broader ML pipelines. It’s newer (GA in March 2025) and primarily used by in-house teams and early adopters, with limited public case studies. No standalone notable external customers are publicly detailed, but it’s adopted by clients building MLOps workflows alongside other Nebius tools (e.g., AI Studio users like TrialHub for tracking fine-tuning runs).

Managed Apache Spark

Serverless data processing for ETL and feature engineering in AI workflows. Launched in late 2024, it’s integrated into data-heavy AI projects but lacks specific public customer stories. It’s used by clients processing large datasets for training, such as those in life sciences (e.g., Converge Bio’s single-cell analysis pipelines), though not explicitly attributed.

Nebius Life Science

Domain-specific AI platform for bioinformatics, drug discovery, and precision medicine, including OpenBioLLM access via AI Studio. Notable customers and awardees include:

- Converge Bio: Biotech startup; trains full-transcriptome foundation models (Converge-SC) on Nebius infrastructure for single-cell RNA sequencing and patient-level insights in drug discovery.
- CRISPR-GPT (Stanford, Princeton, Google DeepMind collaboration): LLM agent system for automating gene-editing experiments; uses Nebius for CRISPR selection, RNA design, and data analysis.
- Ataraxis AI: Cancer prediction platform; awarded $100K GPU credits; achieves 30% higher accuracy than genomic tests in trials.
- Aikium: Protein targeting for “undruggable” diseases like cancer/Alzheimer’s; awarded $100K credits for Yotta-ML² platform.
- Transcripta Bio: Transcriptomic mapping and disease-gene associations; partnered with Microsoft Research using Nebius credits.

And that’s my point. The neo-clouds are competing with the big boys of cloud computing, who aren’t dumb, aren’t sitting still, and aren’t overlooking the opportunity. A company using MongoDB or Confluent is using true open source software and so could potentially move to other cloud platforms with only a reasonable amount of work. However, a company using TractoAI, which is not a standard, will be locked in to that platform, or have to do a lot of rewriting.

I’m not sure how high the migration cost is for AI workloads across platforms, though I don’t think it’s anywhere close to migrating an entire web application from self-operated data centers to AWS. If the switching cost is indeed huge, the same risk holds if the company locks in with a general cloud as well. I don’t have concrete evidence, but I don’t think it’s uncommon for a specialized cloud play to beat the general clouds in its focus area. It’s too early to assume that neo-clouds won’t beat the general clouds in AI infrastructure.

As Nebius disclosed on its Q2 earnings call, Shopify and Cloudflare (two famous names on this board) both became Nebius customers. And both use some parts of Nebius’s software layer rather than just the bare metal.

According to Gemini:

Products used by Shopify

Shopify uses Nebius to power AI features for its e-commerce platform and to diversify its GPU capacity.

- AI Infrastructure: Nebius provides scalable, high-performance GPU clusters that Shopify uses to run large, multi-node AI jobs.
- AI Feature Integration: Shopify is implementing AI-powered features for merchants, such as enhanced product recommendations and other merchant tools, using Nebius’s cloud platform.
- Data Labeling (Toloka): By using data from Nebius’s data labeling subsidiary, Toloka, Shopify can refine its machine learning models to improve the entire merchant and customer experience.
- Multicloud Strategy: Shopify uses Nebius alongside other cloud providers, like Google Cloud, and the tool SkyPilot to manage its AI infrastructure across multiple clouds.

Products used by Cloudflare

Cloudflare primarily utilizes Nebius for high-speed AI inference at the edge of its global network.

- Edge Inference: Nebius helps Cloudflare deploy powerful AI inference, which is the process of using a trained model to make a prediction. Cloudflare runs this inference at the edge of its network to improve the speed and performance of its offerings for customers.
- AI Integration: The partnership allows Cloudflare to integrate AI capabilities across its product portfolio, enhancing security and speed.
- Data Labeling (Toloka): Cloudflare leverages Toloka to train and refine the AI models that it deploys at the edge of its network.

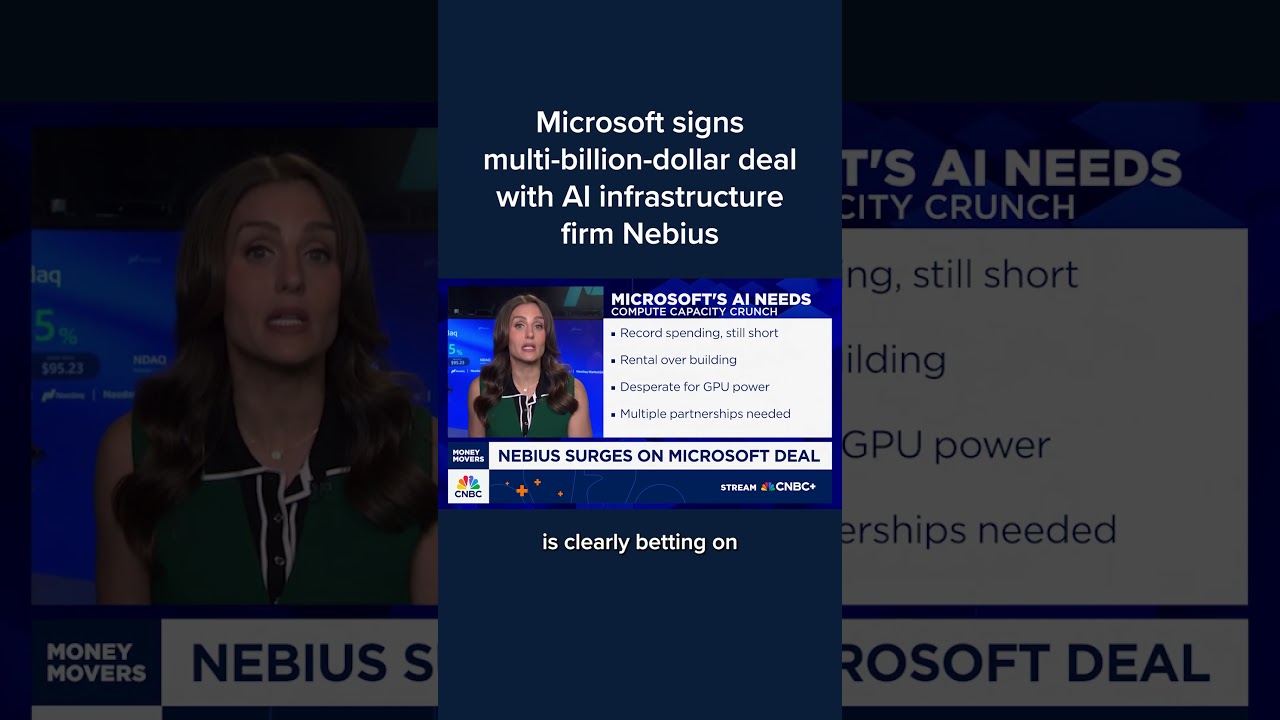

The Microsoft deal moves Nebius’ main business to being a data center provider. It’s literally a different company now than it was a month ago. Microsoft is where the majority of their revenue will be coming from for the next few years.

This is probably a legit assumption, but it’s based only on what the company has done, not on what the company is positioned to achieve in the future. Since you also agree that inference demand will grow bigger than training demand, do you think that inference demand will mostly come from Microsoft or other big tech? I believe AI demand will surge in every industry and every company. So I’m certain that bare metal won’t mean everything for AI neo-clouds. There will be huge demand for the software layer as well. The adoption by Shopify and Cloudflare is just an early example.

So the question comes back to which kinds of companies, if any, will choose Nebius, another neo-cloud, or a general cloud for their end-to-end AI workloads. While I don’t think the answer to this question is clear yet, I’m optimistic about Nebius based on their current execution.

Luffy