Earlier today, I posted an article from the Wall Street Journal that described the circular deals AI companies are making with one another.

I followed up to see how actual end-user consumer spending compares with spending on the AI build-out.

The AI companies are not only investing in each other; they are also buying and selling actual goods and services among themselves.

Nvidia and AMD sell chips. Oracle sells computers. CoreWeave is an AI-cloud infrastructure company that rents out data-center capacity. CoreWeave’s biggest customer is Microsoft, which is an investor in OpenAI, shares revenue with OpenAI, buys chips from Nvidia and has partnerships with AMD.

When Morgan Stanley analysts in an Oct. 8 report mapped out the AI ecosystem to show the capital flows between six companies — OpenAI, Nvidia, Microsoft, Oracle, Advanced Micro Devices and CoreWeave — the arrows connecting them resembled a plate of spaghetti.

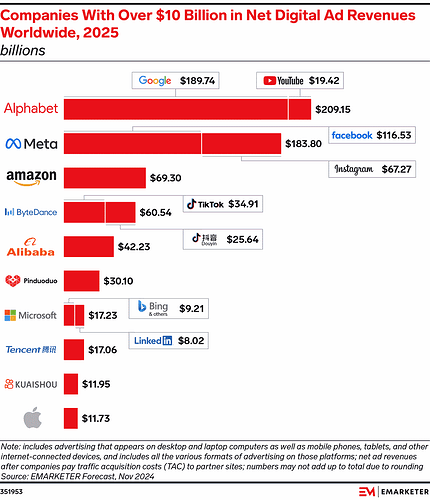

The problem is that these companies are selling goods and services to each other while also making capital deals with each other. Investment in AI infrastructure and development, together with business-to-business spending, is expected to be orders of magnitude higher than end-user consumer spending on AI services.

According to Google Gemini (whose answer agrees with an article I read last week, so I know it’s true):

One analysis estimates that new AI data centers built in 2025 will incur $40 billion in annual depreciation while generating only $15 to $20 billion in total revenue (combining both enterprise and consumer) for the owners. The revenue would have to grow by tenfold or more just to cover the depreciation and achieve a target return on capital…

The AI build-out is so massive that the AI-related capital expenditures (i.e., the cost to build the capacity) have been reported to have contributed more to U.S. GDP growth in the first half of 2025 than all traditional consumer spending combined.

This illustrates a fundamental economic dynamic: the current growth is fueled by companies spending huge sums on infrastructure (CapEx), not by the economic activity generated by end-user purchases (consumption)… [end quote]
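As a rough back-of-the-envelope check, here is a small sketch using only the quoted figures: $40 billion a year in depreciation against $15 to $20 billion a year in revenue. It assumes, unrealistically, that depreciation is the only cost. The tenfold figure in the quote also includes other costs and a target return on capital, which the quote does not spell out, so those are deliberately left out here. The function name `growth_to_cover` is just an illustrative label.

```python
# Figures from the quoted analysis, in billions of US dollars per year.
ANNUAL_DEPRECIATION = 40.0
REVENUE_LOW, REVENUE_HIGH = 15.0, 20.0


def growth_to_cover(cost: float, revenue: float) -> float:
    """Multiple by which revenue must grow just to equal the given annual cost.

    Assumption: the cost passed in is the only expense (no operating costs,
    no required return on capital).
    """
    return cost / revenue


for revenue in (REVENUE_LOW, REVENUE_HIGH):
    multiple = growth_to_cover(ANNUAL_DEPRECIATION, revenue)
    print(f"${revenue:.0f}B revenue must grow {multiple:.2f}x to cover depreciation")
```

This prints a multiple of 2.67x for the $15 billion case and 2.00x for the $20 billion case. In other words, revenue would need to roughly double to nearly triple just to pay for the equipment wearing out. The rest of the gap up to tenfold presumably comes from operating costs and the return investors expect on top of that.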

It’s worth reading my questions and Gemini’s answers in full.

https://gemini.google.com/app/5b59f7597eea1988

By traditional standards, the stocks of these tech companies are all grossly overvalued, even if all of their revenue came from actual end-user spending. The investments in AI resemble the investments made in internet infrastructure before the dot-com bubble burst in 2000.

This is very scary to me. If (when) the bubble collapses, it will drag down GDP as well as the stock market.

Wendy