https://overclock3d.net/reviews/gpu_displays/intel-murdered-amd-with-its-arc-b390-mobile-graphics/

Oh dear. This is news I didn’t want to see any time soon:

Compared to AMD’s HX 370 (at 54W sustained), Intel’s Ultra X9 388H CPU (at 45W sustained) delivered 73% more performance on average. In some instances, Intel achieved double the gaming performance. This was using 1080p high settings with upscaling. To say the least, Intel appears to have smashed AMD. Radeon’s integrated graphics weren’t even close to Intel’s. AMD, once the leader of the mobile gaming market, has been trounced by Intel. Did anyone put that on their 2026 bingo card?
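For a rough sense of what those numbers imply for efficiency, here is a back-of-the-envelope sketch. It assumes the quoted sustained power figures (45W and 54W) reflect what each chip actually drew during the benchmarks, and that the 73% average uplift applies at those power levels. The review excerpt does not state either of these, so treat the result as illustrative only. The function name is made up for this example.

```python
def perf_per_watt_ratio(rel_perf_a: float, watts_a: float,
                        rel_perf_b: float, watts_b: float) -> float:
    """Return how many times more performance per watt chip A delivers than chip B."""
    return (rel_perf_a / watts_a) / (rel_perf_b / watts_b)


# Relative performance is normalized so the HX 370 is 1.00.
# 1.73 = "73% more performance on average" (from the quoted review).
# Assumption: sustained power limits equal actual draw during the tests.
ratio = perf_per_watt_ratio(rel_perf_a=1.73, watts_a=45.0,
                            rel_perf_b=1.00, watts_b=54.0)

print(f"Intel vs AMD performance per watt: {ratio:.2f}x")
```

Under those assumptions the efficiency gap comes out larger than the raw performance gap, since the Intel part is quoted at the lower power level.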

What’s AMD’s response - do they have anything in the works and near to release?

There is a pretty big difference in both process technology and design. The HX 370 is a monolithic die on TSMC N4. OTOH, Panther Lake is a chiplet design that uses Intel 18A for the compute die and TSMC N3 for the GPU. The base Panther Lake has 4 Xe GPU units, while the beast that beats AMD has 12, so a very large GPU die. Panther Lake came later, but it is indeed better.

Ok, thanks. So I’ll presume that Lisa Su already has a plan to best this Intel design, hopefully sooner rather than later.

At CES, AMD announced the 400 series laptop chips, which are a minor improvement on the previous generation. Anybody who wants serious GPU power for gaming is going to use an add-on GPU anyway, so I view AMD as still very competitive here.

Ok, cool.

AMD’s response looks like this:

Disappointing that they don’t seem to plan, for now, to put RDNA 4 in their integrated graphics. I might have thought that the follow-on to Strix Halo would get it or something, but apparently not.

TBD what impact this Intel move will have in the market. I know AMD has been talking towards the end of the need for discrete graphics in notebooks, but now it looks like Intel is closer to that goal.

Server first, then mobile, then desktop. For those who’ve been at this long enough, those words will resonate. The money is in the datacenter.

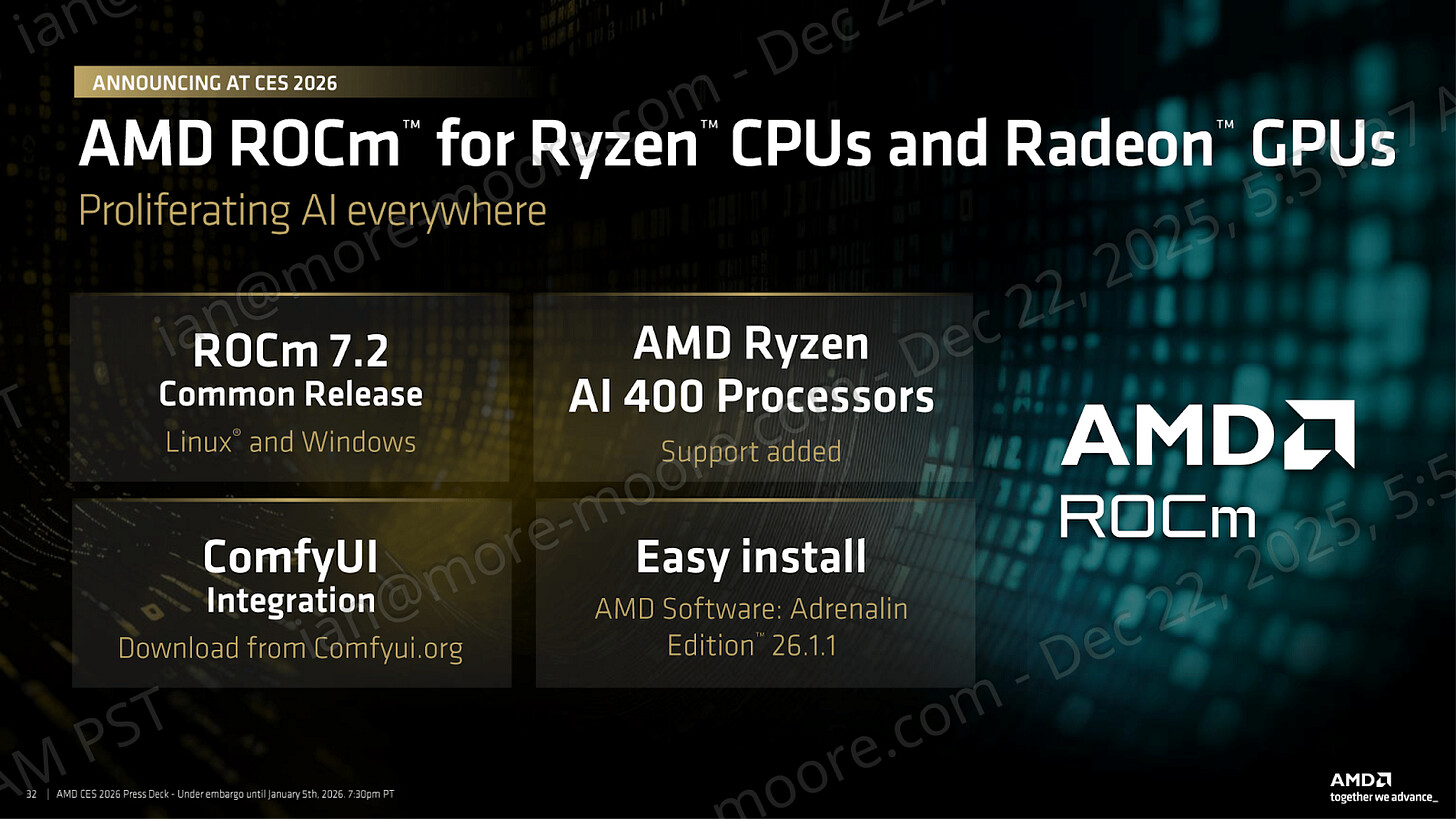

Another take gets more into AMD’s AI efforts, including both chips and the ROCm software layer:

AMD is using CES to re-stack its lineup around the AI Everywhere narrative, positioning Ryzen AI as the default engine across the portfolio rather than a premium tier bolted on at the top. The goal is streamlining: modest hardware lifts, certification box-ticking, and pushing AI capability into lower-priced systems so OEMs can ship volume designs that meet Microsoft’s requirements. It generates noise, but it also signals that this cycle is about reinforcing AMD’s existing platform rather than resetting it.

The timing of these announcements also reflects where the PC market sits today. The initial “AI PC” shock-and-awe phase has passed, and the market has moved into a procurement and refresh cycle where buyers, OEMs, and Microsoft care less about halo products and more about consistent qualification across price bands. That dynamic incentivizes platform continuity over betting a cycle on a single architectural headline.

AMD’s announcements also come across like a defensive move against Intel’s ability to flood OEM designs with tightly segmented SKUs. Right now we’re writing this ahead of CES as part of a news embargo, without any knowledge of what Intel is going to bring to the market at the show, but AMD’s answer here is to ensure it has a comparable ladder, keeping its products and the Zen 5 microarchitecture relevant in the minds of consumers.

This matters because a mid-cycle refresh such as this one often aims to improve price to performance on a now-mature platform. When the architecture remains broadly the same, and the platform story is similar, the differentiators come down to competitiveness. All in all, the refresh signals whether AMD’s ‘AI PC’ positioning translates into the systems people can actually buy in volume.

…

In 2026, AMD looks to show multiple configurations and designs in which OEMs will be implementing AI Max+. These range from notebooks to mini PCs, and even an all-in-one PC design with a built-in screen. This shows SKU versatility, but it all comes down to volume and how vendors want to utilize the SKUs in their designs. Whether vendors ship the big unified memory variants, compared to launch systems, remains to be seen, but AMD is clearly signaling that Max+ is meant to be a platform tier that can be scaled across form factors, and it expects the mini workstation and mini-PC categories to be among the primary homes for the new big iGPU plus unified memory design proposition.

One of the other announcements as part of this is a new ‘Ryzen AI’ workstation. Given the popularity of hardware like the Mac Mini/Mac Studio and NVIDIA’s DGX Spark, rather than showcase a wide array of options from its partners, AMD is building a reference design as a Spark competitor, on Ryzen AI Max+, called the Ryzen AI Halo Developer Platform.

AMD hasn’t provided any details aside from the two images, and we’re expecting AMD CEO Lisa Su to show it off at the CES keynote today (it might already have happened if you’re reading this as we publish). In order to compete with Spark, we’re expecting it to have some form of enhanced networking beyond a 10 GbE port, so perhaps something Pensando-based. AMD will no doubt highlight that being x86-based is a big plus for AI developers. The only other note we got on this in advance is ‘expected in Q2 2026’.

The thing is, one of the key differentiators AMD wants you to focus on with Ryzen AI Max+ is memory capacity, not TOPS. AMD is leaning into the idea that Max+ can enable a class of local AI inference and creation workflows by pairing a large integrated graphics configuration with up to 128 GB of unified memory. A unified, coherent memory structure lets the CPU and GPU share one memory pool concurrently and access the same data structures without requiring software to manually keep copies in sync. Coherence offers a practical upside for the integrated GPU: it can use far more memory than the VRAM on a typical discrete graphics card, drawing directly from system memory without fixed boundaries or the same duplication overhead.
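To make the capacity argument concrete, here is a minimal sketch of the arithmetic, not anything AMD has published. It estimates the memory needed just for model weights (parameter count times bits per parameter) and checks it against a memory pool. Only the 128 GB figure comes from the article. The 70-billion-parameter model, the 24 GB discrete-card figure, and the function names are hypothetical, chosen purely for illustration. The estimate ignores the KV cache, activations, and whatever the OS reserves, so real headroom would be smaller.

```python
def weight_footprint_gb(n_params: float, bits_per_param: int) -> float:
    """Approximate size of the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


def fits(n_params: float, bits_per_param: int, pool_gb: float) -> bool:
    """True if the weights alone fit in a memory pool of pool_gb gigabytes."""
    return weight_footprint_gb(n_params, bits_per_param) <= pool_gb


UNIFIED_POOL_GB = 128.0   # upper limit quoted in the article
DISCRETE_VRAM_GB = 24.0   # hypothetical discrete-card VRAM, for comparison only
MODEL_PARAMS = 70e9       # hypothetical 70-billion-parameter model

for bits in (16, 8, 4):
    size = weight_footprint_gb(MODEL_PARAMS, bits)
    print(f"{bits:>2}-bit weights: {size:6.1f} GB | "
          f"fits in unified pool: {fits(MODEL_PARAMS, bits, UNIFIED_POOL_GB)} | "
          f"fits in discrete VRAM: {fits(MODEL_PARAMS, bits, DISCRETE_VRAM_GB)}")
```

In this hypothetical case, the quantized variants fit in the unified pool while none fit in the smaller discrete pool, which is the kind of workload the memory-capacity pitch is aimed at.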

…

If FSR Redstone is AMD’s gaming proof point that its software can move the needle in a very thin and quiet GPU news cycle, then ROCm is basically the same play from the playbook, this time pointed at local AI. AMD is framing ROCm more directly than before, positioning ROCm 7.2 as a single, common release across both Windows and Linux, with particular emphasis on support for the refreshed Ryzen AI 400 processors. That gives ROCm a sense of newness within AMD’s mid-cycle CES 2026 announcements, helped by the column inches and industry ground it has gained recently. AMD is tying ROCm directly to the new mobile stack and leaning into Windows as a first-class target rather than a side quest.

AMD backs this positioning with a ‘continuous performance uplift’ flavoring, as you would expect from a software-led narrative. Here AMD frames ROCm as showing repeatable gains across both the Ryzen and Radeon product stack rather than as a static-looking toolkit. AMD claims up to a 5x uplift in ROCm 7.1 compared to ROCm 6.4, illustrating the point with examples such as SDXL and Flux S. In these cases, AMD highlights multi-fold gains on both Ryzen AI Max processors and Radeon AI Pro GPUs, reinforcing its message that ROCm is an actively improving software stack where performance advances arrive through iteration rather than new silicon.

One important point to pull out here is that AMD is deliberately using popular and recognizable generative AI workflows as proof points to get its messaging across. AMD even flags that ComfyUI integration is available now and downloadable from the source, which looks like AMD dragging ROCm out of the abstract and into tools people can actually touch.